AI has evolved rapidly and now touches almost everything around us, from chatbots serving customers to complex algorithms predicting which movies or products you may like.

However, amidst all these rapid developments, one glaring problem seems to keep cropping up: the AI black box problem. You might ask yourself, what is this so-called “black box problem”? And why does it matter? We will make it simple for you in this article.

What is the AI Black Box Problem?

Imagine a device that takes whatever you put into it, processes it in some incomprehensible way, and then, voilà, gives you an output. You do not know what happens inside, and you have no way to peek in.

That is the “black box.” When we refer to the AI black box problem, we mean that AI systems make many of their decisions for reasons that are not really understandable, even to the people who built them.

In other words, AI systems produce decisions that no one can fully explain, and this lack of transparency is a big concern for everyone from the users of AI products to policymakers and researchers.

How Does the AI Black Box Work?

Now, let’s try to deconstruct how an AI black box might work. Most current AI systems, especially those based on machine learning or deep learning, rely on complex algorithms that analyze heaps of data and learn the patterns within it.

For example:

- You expose the AI to thousands of pictures of cats and dogs.

- It learns to tell cats from dogs based on the data it is fed.

- Later, it can say, when it is shown a new picture: “That’s a cat!” or “That’s a dog!”

But here is the catch: while the AI may give you the correct answer, nobody can easily say how it arrived at that answer.

The process inside the AI’s “brain” is opaque to us, hence the name black box. Even the experts who build such systems sometimes cannot explain why the AI made a certain decision.
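To make the cats-and-dogs idea concrete, here is a minimal sketch of a model learning from labeled examples. Everything in it is an assumption made for illustration: the two features (`ear_pointiness`, `snout_length`), the toy data, and the choice of a tiny logistic regression. Real image classifiers work on raw pixels and are far larger.

```python
import math

# Hypothetical toy data: (ear_pointiness, snout_length) -> 1 for cat, 0 for dog.
examples = [
    ((0.9, 0.2), 1),
    ((0.8, 0.3), 1),
    ((0.7, 0.1), 1),
    ((0.3, 0.8), 0),
    ((0.2, 0.9), 0),
    ((0.4, 0.7), 0),
]

def predict_probability(weights, bias, features):
    """Return the model's estimated probability that the animal is a cat."""
    score = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-score))

def train(examples, steps=2000, learning_rate=0.5):
    """Fit the weights with plain stochastic gradient descent."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(steps):
        for features, label in examples:
            error = predict_probability(weights, bias, features) - label
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias

weights, bias = train(examples)

def classify(features):
    """Label a new animal using the learned weights."""
    return "cat" if predict_probability(weights, bias, features) >= 0.5 else "dog"

# All the model "knows" is stored in these numbers.
print("learned weights:", weights, "bias:", bias)
print(classify((0.85, 0.15)))
```

With only two weights, a person can still read this model. A deep learning network stores its knowledge the same way, as learned numbers, but has millions or billions of them, and that is where the opacity comes from.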

Why is the AI Black Box a Problem?

You might say, “If it is giving the right answers, why should I care how it works?” AI often does produce accurate results, but the black box brings a number of issues along with it, such as:

Lack of Trust and Accountability

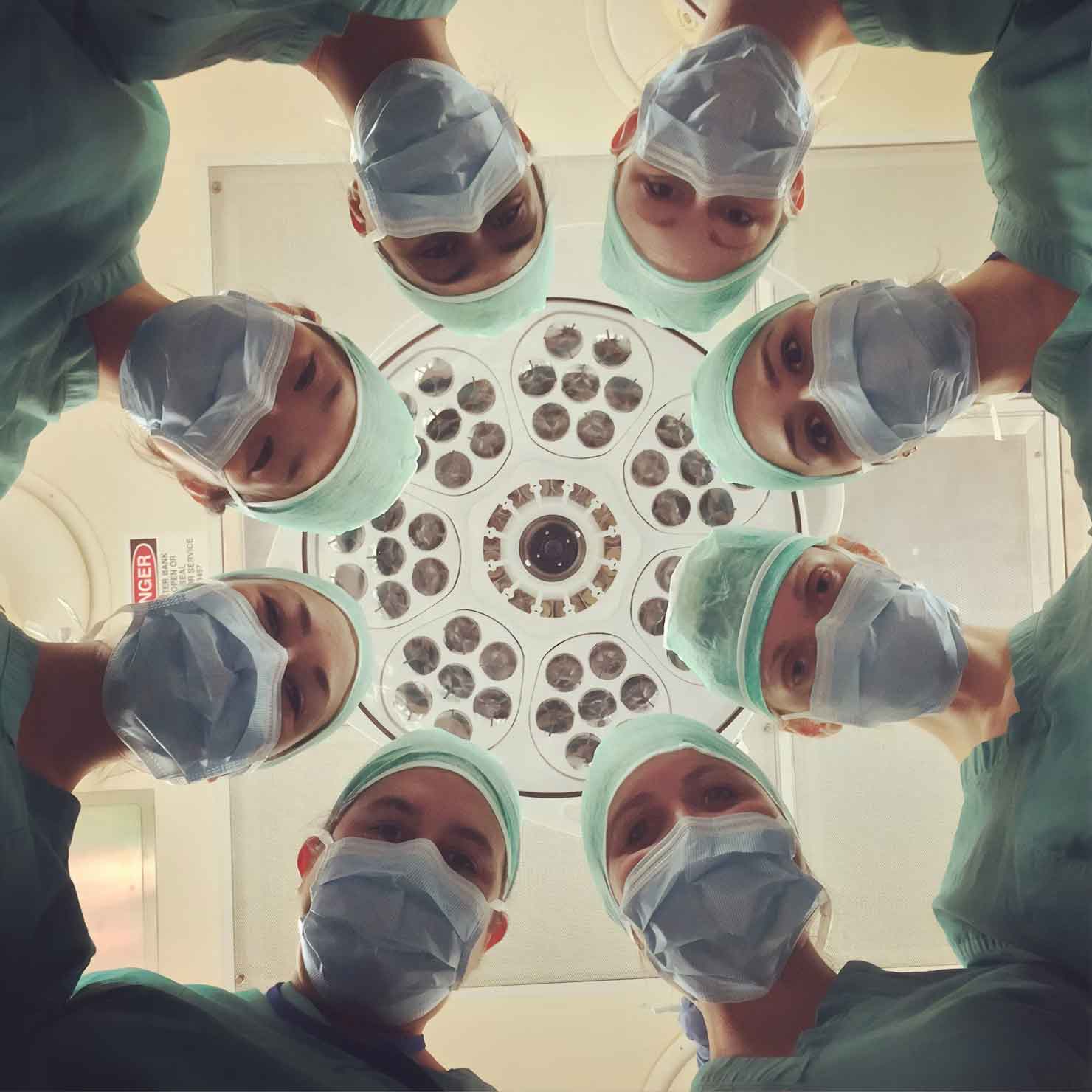

The moment AI starts making decisions that affect people, such as approving a loan, diagnosing an illness, or recommending a criminal sentence, we need to be able to trust those decisions.

How can you trust a decision if you do not know how or why it was made? Imagine being turned down for a loan and the bank being unable to explain why. That lack of explanation erodes trust and raises concerns about fairness.

Bias and Fairness

AI learns from the data it is given. If the data is biased, the AI will reflect that bias. For example, an AI system trained on data in which men hold most of the high-paying jobs may start favoring male candidates when recommending people for a job.

Because there is little transparency about how decisions are made, these biases are hard to identify and fix.

Legal and Ethical Challenges

Many industries, such as healthcare and finance, require decisions to comply with strict rules and regulations.

But how do you regulate something when you don’t understand it? The black box nature of AI makes it very hard to tell whether decisions are legal and ethical. For example, if an AI misdiagnoses a patient, who is at fault: the doctor or the AI system?

How Do We Overcome the AI Black Box Problem?

Now that we have discussed the problem, let’s talk about the solution. While tricky, it is not impossible to overcome the problem of the black box in AI.

In fact, researchers and developers are working hard on ways to make AI systems more transparent and understandable. Here’s how the issue can be addressed:

Explainable AI (XAI)

Explainable AI, or simply XAI, is a field of research devoted to making AI systems explain their decisions. A truly explainable AI system would not just give the answer but would also show the work it did to get there.

For example, when diagnosing a patient with an illness, it would also explain which symptoms or patterns in the data led to that diagnosis. This makes AI more transparent and builds trust.
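One simple form of explanation is to report how much each input contributed to the result. The sketch below does this for a linear score, where a contribution is just the weight times the input value. The symptom names, weights, and threshold are all hypothetical and have no medical meaning. XAI methods for complex models are more involved, but they aim to produce a similar kind of breakdown.

```python
# Hypothetical weights for a linear score; these do not come from any real model.
WEIGHTS = {"fever": 2.0, "cough": 1.0, "fatigue": 0.5}
THRESHOLD = 2.5  # hypothetical cut-off for flagging a patient

def explain(patient):
    """Return the decision, the total score, and each symptom's contribution."""
    contributions = {
        name: WEIGHTS[name] * patient.get(name, 0) for name in WEIGHTS
    }
    score = sum(contributions.values())
    decision = "flag for follow-up" if score >= THRESHOLD else "no flag"
    # Sort so the most influential symptoms come first.
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return decision, score, ranked

decision, score, ranked = explain({"fever": 1, "cough": 1, "fatigue": 0})
print(decision, score)
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.1f}")
```

Here the system reports not only the decision but also that fever counted twice as much as cough, which is the kind of “showing its work” described above.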

Simpler Models

Some argue that we should instead develop simpler forms of AI that we can actually understand. The most powerful models today, deep learning networks, are also the least explainable.

Simpler models may not be as powerful, but they are more transparent: we can actually understand how they make decisions.
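As a rough illustration of what a “simpler model” can look like, the sketch below fits a one-rule model (sometimes called a decision stump) to hypothetical loan data. The income figures and approval labels are invented for the example. The point is that the whole model fits in one sentence that a person can read.

```python
def fit_stump(values, labels):
    """Find the threshold t where 'value >= t means True' makes the fewest mistakes."""
    best_threshold, best_errors = None, len(values) + 1
    for t in sorted(set(values)):
        errors = sum((v >= t) != label for v, label in zip(values, labels))
        if errors < best_errors:
            best_threshold, best_errors = t, errors
    return best_threshold, best_errors

# Hypothetical training data: yearly income in thousands, and whether the loan was approved.
incomes = [20, 25, 30, 45, 50, 60]
approved = [False, False, False, True, True, True]

threshold, errors = fit_stump(incomes, approved)
print(f"Rule: approve if income >= {threshold} ({errors} training errors)")
```

A rule like this is trivial to inspect and challenge, but a single threshold cannot capture the subtle patterns a deep network can, which is the trade-off between power and transparency.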

Auditing and Testing

Another approach is to audit and test AI systems periodically for bias, accuracy, and fairness.

Reviewing how AI systems make their decisions helps catch problems early. Regular testing and auditing help ensure that these systems perform reliably and make fair decisions.
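A basic audit can be as simple as comparing how a system treats different groups. The sketch below assumes a hypothetical log of past decisions, where each record holds a group label, the model's decision, and the decision that would have been correct. It then reports the approval rate and accuracy per group. The data and the group names are invented, and a real audit would use more records and more metrics.

```python
from collections import defaultdict

# Hypothetical audit log: (group, model_decision, correct_decision), 1 = approve.
records = [
    ("A", 1, 1), ("A", 1, 0), ("A", 0, 0), ("A", 1, 1),
    ("B", 0, 1), ("B", 0, 0), ("B", 1, 1), ("B", 0, 1),
]

def audit(records):
    """Compute approval rate and accuracy separately for each group."""
    totals = defaultdict(lambda: {"n": 0, "approved": 0, "correct": 0})
    for group, predicted, actual in records:
        stats = totals[group]
        stats["n"] += 1
        stats["approved"] += predicted
        stats["correct"] += int(predicted == actual)
    return {
        group: {
            "approval_rate": stats["approved"] / stats["n"],
            "accuracy": stats["correct"] / stats["n"],
        }
        for group, stats in totals.items()
    }

report = audit(records)
for group, metrics in sorted(report.items()):
    print(group, metrics)

# A large gap between groups is a signal to investigate, not proof of unfairness.
approval_gap = abs(report["A"]["approval_rate"] - report["B"]["approval_rate"])
print("approval rate gap:", approval_gap)
```

Checks like this work even when the model itself is a black box, because they only look at its inputs and outputs.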

Regulations and Policies

Governments and organizations are also drawing up policies and regulations to address the AI black box problem.

For example, the European Union has introduced legislation, the AI Act, that imposes transparency requirements on AI systems used in high-stakes areas such as healthcare or finance.

Why Should You Care About the AI Black Box Problem?

You may not be a computer scientist, but the AI black box problem is not something that concerns only specialists.

As AI systems are deployed more widely, they affect many aspects of our lives, from the ads you see online and the products you buy to how your data is used.

It helps to have some idea of how these systems do or do not work, so that you can make informed choices about using them.

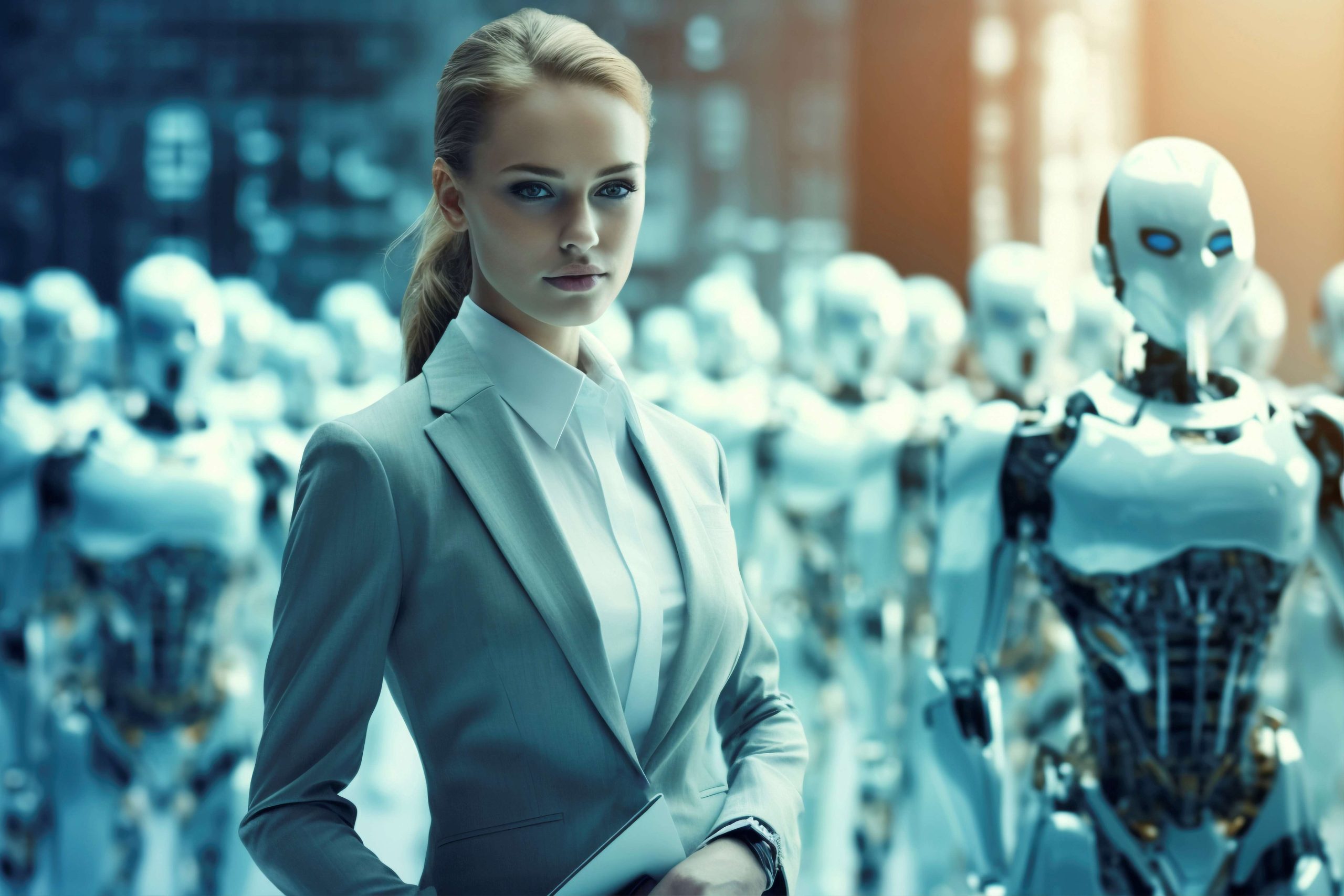

Consider this: Would you get into an autonomous car if nobody knew how it made decisions on the road? AI will become safer and fairer as we understand it better and work to solve the black box problem.

Conclusion

One of the most critical challenges facing the world of artificial intelligence is the problem of AI black boxes.

The key risks of this lack of transparency are reduced trust in AI systems, doubts about their fairness, and unresolved ethical questions.

With continued research, explainable AI, simpler models, audits, and better regulations, a future in which AI decisions are understandable and trustworthy is quite plausible.

Understanding the black box problem is one of the first steps toward developing better AI that benefits all of us.

The next time you use any gadget or app powered by AI, just remember that a lot is happening in the background, and the black box problem is exactly what we need to solve if we ever hope to make AI truly safe and fair.
