AI is spreading across the world at a remarkable pace. From virtual assistants like Siri and Alexa to AI-powered medical diagnostics, intelligent systems are finding their place in our day-to-day lives.

Amid all the benefits AI showers us with, we also have to address one fundamental question: what are the ethical implications of using AI agents?

Let me explain these ethical issues in a way that is easy to understand.

What is an AI Agent?

An AI agent is a system or piece of software designed to perform tasks independently by learning from data and interacting with its environment.

Whether it’s a chatbot answering your customer service questions or a program that suggests what you should watch next on Netflix, AI agents are everywhere.

But the thing that really makes them both fascinating and sometimes controversial is their ability to “learn” and make decisions that can, at times, approach human thought.

However promising developments in AI technology may be, they raise serious concerns for society as a whole because of the significant ethical issues they bring with them.

Now, we will delve into these ethical considerations, breaking them down into manageable topics that anyone can understand.

Here are 5 examples of AI agents:

- Siri (Apple) – A virtual assistant that uses natural language processing to perform tasks, answer queries, and interact with users based on voice commands.

- Alexa (Amazon) – An AI-powered voice assistant capable of controlling smart home devices, playing music, providing information, and more through user interactions.

- Google Assistant – An AI agent designed to help users by answering questions, setting reminders, and controlling smart devices, utilizing Google’s search and data resources.

- Tesla Autopilot – An AI driver-assistance agent in Tesla vehicles that helps with steering, lane changes, and self-parking, using machine learning to adapt to road conditions and surroundings.

- ChatGPT (OpenAI) – A conversational AI agent designed to generate human-like text, assist with queries, and engage in dialogue, using the context of a conversation to improve its responses.

1. Privacy and Data Security: Who’s Watching You?

How AI systems handle our personal data is a major ethical concern. Many AI systems rely on huge datasets that include, among other things, your preferences, your habits, and sometimes even sensitive personal details.

But have you ever stopped to think about how much of your data is being collected and who controls it?

Privacy Risks

When you use any AI-driven service, such as a social media platform or a search engine, you give out far more personal information than you might realize. This raises questions about how that data is stored, shared, and even sold.

Data Breach

However advanced AI systems become, they remain vulnerable to hacking. A security breach could leak personal information and lead to serious identity fraud.

Wherever AI is used, its benefits need to be weighed against privacy. Should companies face stricter regulation of how they process your data? That debate will continue.

2. Bias in AI: Are AI Agents Fair?

AI agents learn from data, which brings us to another ethical issue: bias. If the data that feeds an AI system is biased, the decisions it produces will be biased too, leading to unfair treatment or discrimination in key sectors such as:

Hiring

AI tools now routinely sift through job applications. If the data they learn from is biased, say, toward men over women, the hiring process becomes discriminatory.
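To make the hiring example more concrete, here is a minimal Python sketch of one common way to check a screening tool's output for bias: compare the share of applicants from each group that the tool advances. Everything in it is an assumption for illustration: the `decisions` data is hypothetical, the helper names `selection_rates` and `disparate_impact_ratio` are made up, and the 0.8 threshold is the "four-fifths" rule of thumb from US employment guidelines, not a universal standard.

```python
from collections import Counter

# Hypothetical screening decisions from an AI tool: (group, advanced_to_interview)
decisions = [
    ("men", True), ("men", True), ("men", True), ("men", False),
    ("women", True), ("women", False), ("women", False), ("women", False),
]

def selection_rates(records):
    """Return the share of applicants in each group that the tool advanced."""
    totals = Counter(group for group, _ in records)
    selected = Counter(group for group, passed in records if passed)
    # Counter returns 0 for groups with no selected applicants
    return {group: selected[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Divide the lowest group selection rate by the highest one."""
    return min(rates.values()) / max(rates.values())

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'men': 0.75, 'women': 0.25}
print(round(ratio, 2))  # 0.33

# Assumed threshold: the "four-fifths" (0.8) rule of thumb
if ratio < 0.8:
    print("Possible adverse impact: ratio below the four-fifths guideline")
```

A low ratio does not prove discrimination on its own, and a ratio above 0.8 does not prove fairness; it is simply a quick signal that the data or the model deserves a closer look.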

Law and Order

Predictive policing systems use AI to forecast where crimes are most likely to occur. But if those forecasts are based on prejudiced data, they could end up unfairly targeting entire communities.

For AI agents to be fair, developers have to be conscious of the data they use. This raises another question: can AI ever be truly unbiased?

3. Accountability: Who’s Gonna Take the Blame When AI Messes Up?

AI systems can make errors, and when they do, it can be difficult to determine who is responsible. Should we blame the developer, the user, or the artificial intelligence itself?

For instance, if a self-driving car is involved in an accident, where does the responsibility lie: with the car manufacturer, the software engineers, or the AI itself? In such situations, assigning responsibility raises difficult questions of fairness and justice.

Even more surprisingly, AI systems can make decisions in complex environments through processes that even their creators do not fully understand.

This lack of transparency is known as the “black box” problem, and it makes accountability even harder to achieve. People want to know exactly what an AI system decided and how it reached that decision, particularly when those decisions have important real-world consequences.

4. Job Displacement: Will AI Take Our Jobs?

Beyond privacy and security, another major ethical concern about AI agents is their effect on employment. Many people fear that AI will take over human jobs in manufacturing, transportation, and even healthcare.

Automation

AI-powered machines and robots can perform many tasks faster and more efficiently than human beings, which raises fears that they will replace human workers.

New Opportunities

AI can also create jobs. For instance, developing, maintaining, and improving AI systems requires skilled people. Whether these new jobs will offset the ones lost remains an open question.

Above all, society needs to prepare for this shift by focusing on retraining and education. Are we ready to adapt to a world where machines can do much of our work?

5. Ethical Decision-Making: Can AI Understand Right from Wrong?

AI systems can make accurate decisions based on large amounts of data, but are they capable of understanding ethics? In reality, AI lacks the emotional intelligence and moral reasoning that humans have. This raises questions about the ethics of the decisions it makes.

Military Use

Many countries are developing AI-powered weapons. Machines making life-and-death decisions raise huge ethical concerns. Can AI reliably distinguish between combatants and civilians in a war zone?

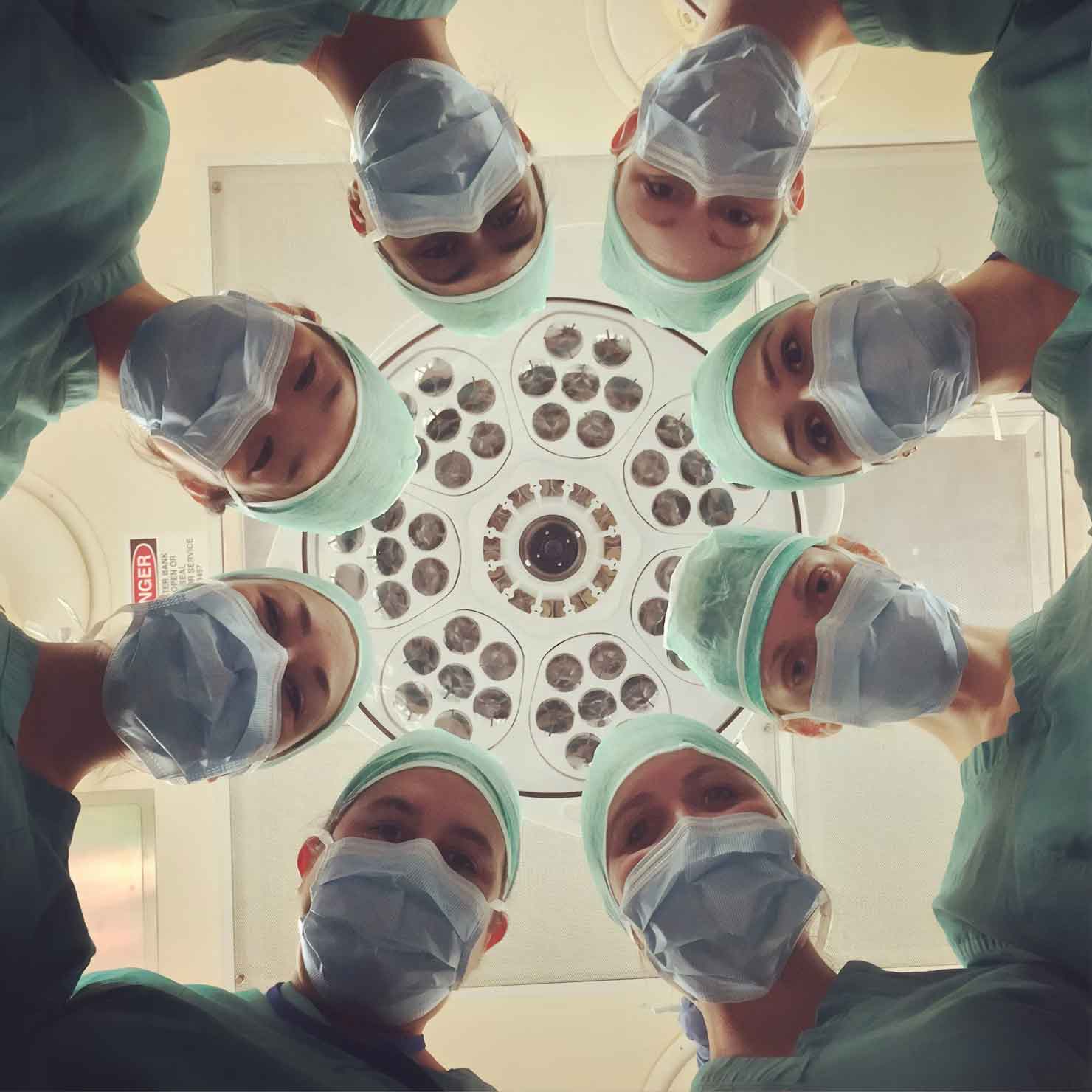

Healthcare

AI assists in medical diagnosis. But can we really entrust it with ethical decisions when human lives are involved?

Ultimately, it is up to humans to set the ethical limits and guidelines within which AI operates, so that these systems reflect our moral values.

6. Transparency and Control: Do We Have a Say?

One of the main ethical issues is transparency: how well do users understand, and how much control do they have over, the AI systems they work and interact with?

When people use AI systems, they have a right to know what decisions are being made and why. Imagine dealing with an AI agent that makes recommendations about your health, your finances, or even your legal rights without your understanding how it arrives at them.

A lack of transparency can also be exploited to manipulate or take advantage of users.

For AI to be used ethically, developers should build systems that not only do their jobs but are also transparent and understandable to users. People should be able to question decisions, object to them, or even opt out if a system makes them uncomfortable.

Final Thoughts: The Road Ahead

AI agents have enormous potential to improve our lives. At the same time, they introduce grave ethical challenges, from privacy and bias to job displacement and accountability, all of which must be navigated with great care.

Given the current pace of AI development, the time has come for individuals, companies, and governments to work together to ensure that AI is developed and used responsibly and ethically.

So, what do you think? Are we ready to embrace AI, or do we need to slow down and address these concerns first? These ethical considerations should form the foundation of every discussion about AI technology from now on.